eig(eigenvectors=False) -> (Tensor, Tensor): See torch.eig()

double: self.double() is equivalent to self.to(torch.float64).

dot(tensor2) → Tensor: See torch.dot()

div_(value) → Tensor: In-place version of div()

div(value) → Tensor: See torch.div()

dist: See torch.dist()

dim: Returns the number of dimensions of self tensor.

diag_embed(offset=0, dim1=-2, dim2=-1) → Tensor: See torch.diag_embed()

diag: See torch.diag()

data_ptr: Returns the address of the first element of self tensor.

cumsum(dim, dtype=None) → Tensor: See torch.cumsum()

cumprod: See torch.cumprod()

cuda(device=None, non_blocking=False) → Tensor: Returns a copy of this object in CUDA memory. If this object is already in CUDA memory and on the correct device, then no copy is performed and the original object is returned. Parameters: device (torch.device) – The destination GPU device. non_blocking (bool) – If True and the source is in pinned memory, the copy will be asynchronous with respect to the host.

cross: See torch.cross()

cpu() → Tensor: Returns a copy of this object in CPU memory. If this object is already in CPU memory and on the correct device, then no copy is performed and the original object is returned.

cosh_: In-place version of cosh()

cosh() → Tensor: See torch.cosh()

cos_: In-place version of cos()

copy_(src, non_blocking=False) → Tensor: Copies the elements from src into self tensor and returns self. src may be of a different data type or reside on a different device. Parameters: src (Tensor) – the source tensor to copy from. non_blocking (bool) – if True and this copy is between CPU and GPU, the copy may occur asynchronously with respect to the host.

contiguous: Returns a contiguous tensor containing the same data as self tensor. If self tensor is contiguous, this function returns the self tensor.

clone: Unlike copy_(), this function is recorded in the computation graph. Gradients propagating to the cloned tensor will propagate to the original tensor.

cauchy_(median=0, sigma=1, *, generator=None) → Tensor: Fills the tensor with numbers drawn from the Cauchy distribution: \(f(x) = \dfrac{1}{\pi} \dfrac{\sigma}{(x - \text{median})^2 + \sigma^2}\)

byte: self.byte() is equivalent to self.to(torch.uint8).

btrisolve(LU_data, LU_pivots) → Tensor: See torch.btrisolve()

btrifact_with_info(pivot=True) -> (Tensor, Tensor, Tensor): See torch.btrifact_with_info()

btrifact: See torch.btrifact()

bmm(batch2) → Tensor: See torch.bmm()

bernoulli_: self can have integral dtype, but p_tensor must have floating point dtype. See also bernoulli() and torch.bernoulli()

bernoulli(*, generator=None) → Tensor: Returns a result tensor where each \(\texttt{result[i]}\) is independently sampled from \(\text{Bernoulli}(\texttt{self[i]})\).

baddbmm_(beta=1, alpha=1, batch1, batch2) → Tensor: In-place version of baddbmm()

baddbmm(beta=1, alpha=1, batch1, batch2) → Tensor: See torch.baddbmm()

atan_() → Tensor: In-place version of atan()

atan2_(other) → Tensor: In-place version of atan2()

atan2(other) → Tensor: See torch.atan2()

atan() → Tensor: See torch.atan()

asin_: In-place version of asin()

argmin(dim=None, keepdim=False): See torch.argmin()

argmax: See torch.argmax()
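The clone(), copy_(), contiguous(), and cpu() entries above describe when data is copied and when the original object is reused. The snippet below is a minimal sketch of that behaviour on CPU tensors only; the variable names are illustrative, and it assumes a reasonably recent PyTorch install.

```python
import torch

# clone() is recorded in the computation graph, so gradients that reach
# the clone also reach the original tensor.
x = torch.ones(3, requires_grad=True)
y = x.clone()
(y * 2).sum().backward()
print(x.grad)

# copy_() writes src into self in place and returns self;
# src may have a different dtype (int64 here, float64 destination).
dst = torch.zeros(3, dtype=torch.float64)
src = torch.tensor([1, 2, 3])
out = dst.copy_(src)
print(out.data_ptr() == dst.data_ptr(), dst)

# contiguous() reuses the same storage when the tensor is already contiguous,
# and makes a compact copy when it is not (for example after a transpose).
a = torch.arange(6).reshape(2, 3)
same_storage = a.contiguous().data_ptr() == a.data_ptr()
t = a.t()
print(same_storage, t.is_contiguous(), t.contiguous().is_contiguous())

# cpu() performs no copy when the tensor already lives in CPU memory.
print(a.cpu().data_ptr() == a.data_ptr())
```

Comparing data_ptr() values is used here as a simple way to check whether two tensors share the same underlying memory.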

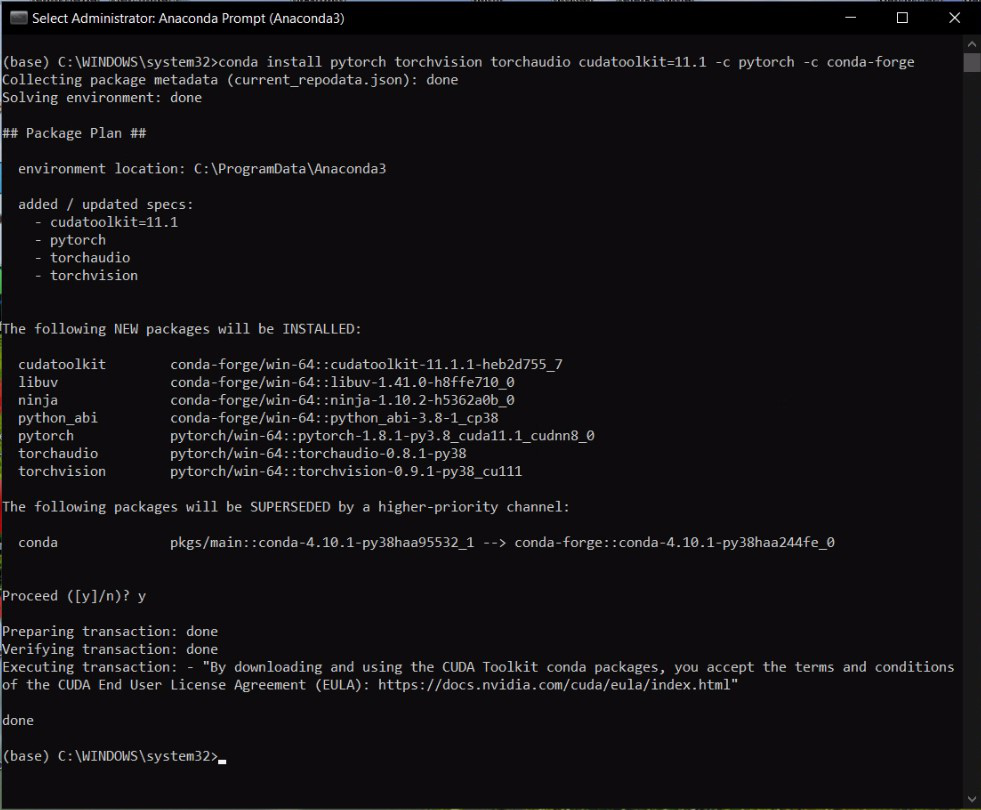

Permute pytorch code

apply_(callable) → Tensor: Applies the function callable to each element in the tensor, replacing each element with the value returned by callable. This function only works with CPU tensors and should not be used in code sections that require high performance.

allclose(other, rtol=1e-05, atol=1e-08, equal_nan=False) → Tensor: See torch.allclose()

addr_(beta=1, alpha=1, vec1, vec2) → Tensor: In-place version of addr()

addr(beta=1, alpha=1, vec1, vec2) → Tensor: See torch.addr()

addmv_(beta=1, tensor, alpha=1, mat, vec) → Tensor: In-place version of addmv()

addmv(beta=1, tensor, alpha=1, mat, vec) → Tensor: See torch.addmv()

addmm_(beta=1, mat, alpha=1, mat1, mat2) → Tensor: In-place version of addmm()

addmm(beta=1, mat, alpha=1, mat1, mat2) → Tensor: See torch.addmm()

addcmul_(value=1, tensor1, tensor2) → Tensor: In-place version of addcmul()

addcmul(value=1, tensor1, tensor2) → Tensor: See torch.addcmul()

addcdiv_(value=1, tensor1, tensor2) → Tensor: In-place version of addcdiv()

addcdiv(value=1, tensor1, tensor2) → Tensor: See torch.addcdiv()

addbmm_(beta=1, mat, alpha=1, batch1, batch2) → Tensor: In-place version of addbmm()

addbmm(beta=1, mat, alpha=1, batch1, batch2) → Tensor: See torch.addbmm()

add_(value) → Tensor: In-place version of add()

add(value) → Tensor: See torch.add()

acos_: In-place version of acos()

acos() → Tensor: See torch.acos()

abs_: In-place version of abs()

is_cuda: Is True if the Tensor is stored on the GPU, False otherwise.

Example: calling new_zeros((2, 3)) on a torch.float64 tensor returns tensor([[0., 0., 0.], [0., 0., 0.]], dtype=torch.float64).

torch.Tensor is an alias for the default tensor type (torch.FloatTensor). A tensor can be constructed from a Python list or sequence using the torch.tensor() constructor.

Torch defines eight CPU tensor types and eight GPU tensor types.
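Many of the entries above come in pairs: a method such as add() that returns a new tensor, and a trailing-underscore version such as add_() that modifies the tensor in place. A short sketch of that convention, together with apply_(), new_zeros(), and is_cuda, might look like this (CPU only; variable names are illustrative):

```python
import torch

t = torch.tensor([1.0, 2.0, 3.0])

# Out-of-place: add() returns a new tensor and leaves t unchanged.
u = t.add(1)

# In-place: add_() modifies t itself and returns it.
t.add_(10)

# apply_() calls a Python function once per element.
# It only works on CPU tensors and is slow, so avoid it in hot paths.
v = torch.tensor([1.0, 2.0, 3.0])
v.apply_(lambda e: e * e)

# new_zeros() builds a zero-filled tensor with the dtype and device
# of the tensor it is called on.
z = torch.tensor((), dtype=torch.float64).new_zeros((2, 3))

print(u, t, v)
print(z, z.is_cuda)
```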

A torch.Tensor is a multi-dimensional matrix containing elements of a single data type.
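As a rough illustration of "a single data type", the sketch below builds a tensor from a nested Python list and converts it with double() and byte(). It assumes the default dtype has not been changed from its usual setting; variable names are illustrative.

```python
import torch

# Build a 2-D tensor from a nested Python list.
m = torch.tensor([[1.0, 2.0], [3.0, 4.0]])

# Every element shares one dtype; floats use the default dtype.
print(m.dtype, m.dim(), tuple(m.shape))

# double() and byte() are shorthand for .to(torch.float64) and .to(torch.uint8).
d = m.double()
b = m.byte()
print(d.dtype, b.dtype)
```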
